Earthformer: Exploring Space-Time Transformers for Earth System Forecasting

Zhihan Gao²*, Xingjian Shi¹**, Hao Wang³, Yi Zhu¹, Yuyang Wang¹, Mu Li¹, Dit-Yan Yeung²

¹Amazon Web Services, ²Hong Kong University of Science and Technology, ³Rutgers University

*Work done during an internship at Amazon Web Services. **Contact person.

Abstract

Conventionally, Earth system (e.g., weather and climate) forecasting relies on numerical simulation with complex physical models, which is both computationally expensive and demanding of domain expertise. With the explosive growth of spatiotemporal Earth observation data in the past decade, data-driven models that apply Deep Learning (DL) have shown impressive potential for various Earth system forecasting tasks. The Transformer, an emerging DL architecture, has seen limited adoption in this area despite its broad success in other domains. In this paper, we propose Earthformer, a space-time Transformer for Earth system forecasting. Earthformer is based on a generic, flexible and efficient space-time attention block named Cuboid Attention. The idea is to decompose the data into cuboids and apply cuboid-level self-attention in parallel. These cuboids are further connected with a collection of global vectors. We conduct experiments on the MovingMNIST dataset and a newly proposed chaotic N-body MNIST dataset to verify the effectiveness of cuboid attention and to identify the best design of Earthformer. Experiments on two real-world benchmarks, precipitation nowcasting and El Niño/Southern Oscillation (ENSO) forecasting, show that Earthformer achieves state-of-the-art performance.

Problem Overview

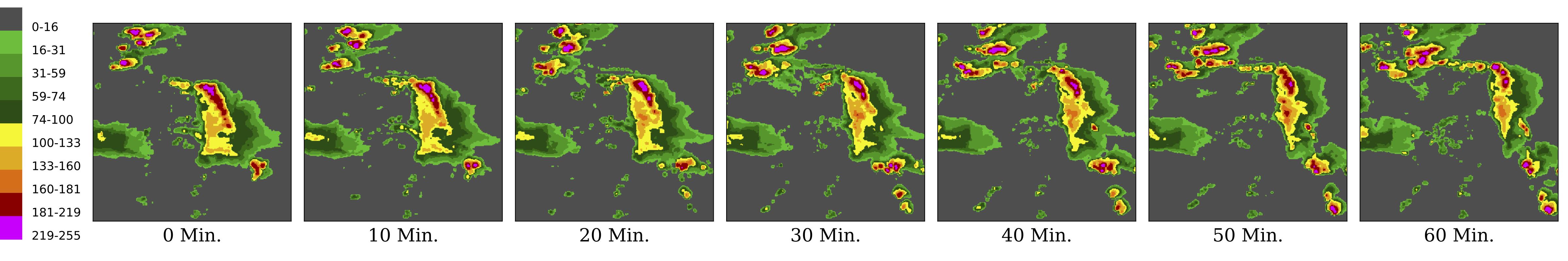

The Earth is a complex system: chaotic, high-dimensional and spatiotemporal. The figure above shows an example of Earth observation data, a Vertically Integrated Liquid (VIL) observation sequence from the Storm EVent ImageRy (SEVIR) dataset. Better forecasting models for the variability of the Earth have a large socioeconomic impact. For example, they can help people take the precautions needed to avoid crises, or make better use of natural resources such as wind and solar energy.

We formulate Earth system forecasting as a spatiotemporal sequence forecasting problem. Earth observation data, such as radar echo maps from NEXRAD and climate data from CMIP6, are represented as a spatiotemporal sequence. Given T observed steps, the model predicts the next K steps.
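One way to state this formally is sketched below. The symbols H and W (spatial size), C (channels), the model F_θ and the loss ℓ (for example, mean squared error) are our own notation and assumptions, not taken from the text above. In the SEVIR setting described later, T = 13 and K = 12.

```latex
\mathcal{X}_{\mathrm{in}} = [X_1, \dots, X_T] \in \mathbb{R}^{T \times H \times W \times C_{\mathrm{in}}},
\qquad
\mathcal{X}_{\mathrm{out}} = [X_{T+1}, \dots, X_{T+K}] \in \mathbb{R}^{K \times H \times W \times C_{\mathrm{out}}}

\hat{\mathcal{X}}_{\mathrm{out}} = \mathcal{F}_{\theta}(\mathcal{X}_{\mathrm{in}}),
\qquad
\theta^{\star} = \arg\min_{\theta} \; \mathbb{E}\big[\, \ell\big(\mathcal{F}_{\theta}(\mathcal{X}_{\mathrm{in}}),\, \mathcal{X}_{\mathrm{out}}\big) \big]
```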

Method

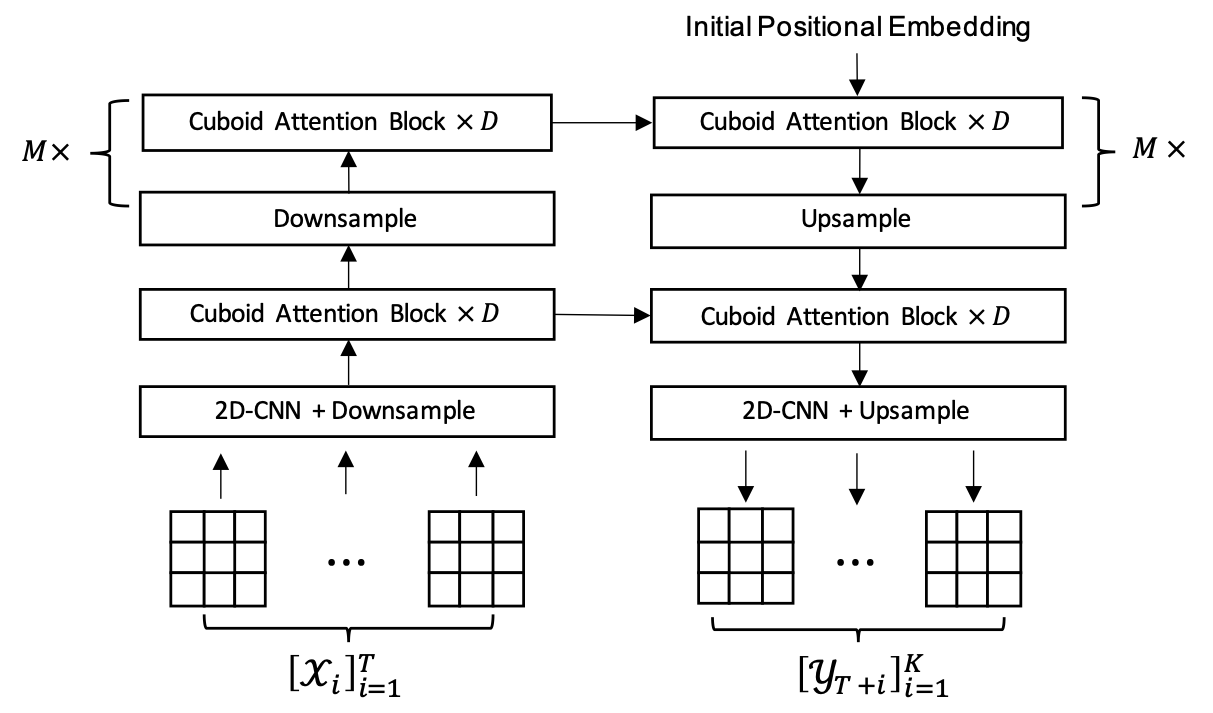

We propose Earthformer, which is a hierarchical Transformer encoder-decoder based on Cuboid Attention. The input observations are encoded as a hierarchy of hidden states and then decoded to the prediction target.

Architecture

As illustrated in the following figure, the input sequence has length T and the target sequence has length K. “×D” denotes a stack of D cuboid attention blocks with residual connections, and “M×” denotes M levels of hierarchy.
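To make the “M×” hierarchy concrete, the sketch below traces hidden-state shapes through M levels. It is a minimal illustration under a hypothetical rule that we chose: between levels the encoder merges 2×2 spatial patches (keeping T) and doubles the channel count, and the decoder mirrors the levels from coarse to fine while holding K target frames. The helper names `hierarchy_shapes` and `decoder_shapes` are hypothetical, and the numbers are not Earthformer's actual configuration.

```python
def hierarchy_shapes(t, h, w, c, num_levels, downsample=2, channel_mult=2):
    """Encoder hidden-state shapes (T, H, W, C) at each of the M hierarchy levels.

    Hypothetical rule: between levels, merge downsample x downsample spatial
    patches (T is kept) and multiply the channel count by channel_mult.
    """
    shapes = []
    for level in range(num_levels):
        shapes.append((t, h, w, c))
        if level < num_levels - 1:
            if h % downsample or w % downsample:
                raise ValueError("H and W must be divisible by the downsampling factor")
            h, w, c = h // downsample, w // downsample, c * channel_mult
    return shapes


def decoder_shapes(encoder_shapes, k):
    """Decoder states mirror the encoder from coarse to fine but hold K target frames."""
    return [(k, h, w, c) for (_, h, w, c) in reversed(encoder_shapes)]


enc = hierarchy_shapes(t=13, h=96, w=96, c=64, num_levels=2)
print(enc)                        # [(13, 96, 96, 64), (13, 48, 48, 128)]
print(decoder_shapes(enc, k=12))  # [(12, 48, 48, 128), (12, 96, 96, 64)]
```

Each level in such a hierarchy would hold its own “×D” stack of cuboid attention blocks; coarser levels are cheaper to attend over because they contain fewer tokens.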

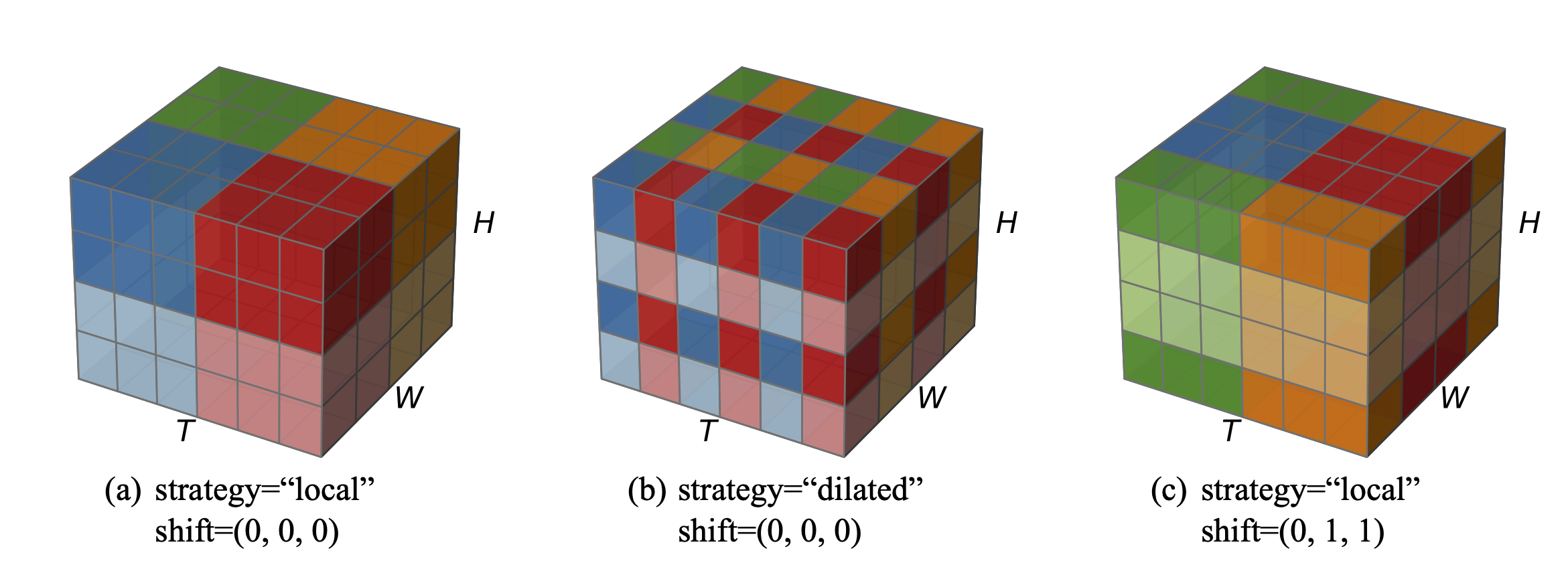

Cuboid Attention

We propose a generic cuboid attention layer that involves three steps: “decompose”, “attend”, and “merge”. In addition, we introduce a collection of P global vectors that help the cuboids scatter and gather crucial global information.
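The three steps can be sketched in plain Python. This is a toy under stated assumptions, not the authors' implementation: each token is a single scalar, attention uses q = k = v = value with no learned projections or heads, the P global vectors are omitted, and every axis length must be divisible by its cuboid size. “Shift” is modeled as a cyclic shift (in the spirit of Swin's shifted windows), “local” groups contiguous positions, and “dilated” groups positions spaced evenly apart; the exact indexing in Earthformer may differ.

```python
import math
from itertools import product


def axis_assign(i, length, block, shift, strategy):
    """Map coordinate i on one axis to (cuboid index, offset inside the cuboid)."""
    if length % block:
        raise ValueError("axis length must be divisible by the cuboid size")
    n_blocks = length // block
    j = (i - shift) % length        # cyclic shift
    if strategy == "local":         # contiguous runs of `block` positions
        return j // block, j % block
    if strategy == "dilated":       # positions spaced n_blocks apart
        return j % n_blocks, j // n_blocks
    raise ValueError(f"unknown strategy: {strategy}")


def _assign(pos, shape, cuboid_size, strategy, shift):
    pairs = [axis_assign(i, n, b, s, strategy)
             for i, n, b, s in zip(pos, shape, cuboid_size, shift)]
    return tuple(p[0] for p in pairs), tuple(p[1] for p in pairs)


def decompose(grid, cuboid_size, strategy="local", shift=(0, 0, 0)):
    """Step 1: split a T x H x W grid (nested lists) into cuboids.

    Returns {cuboid index: list of values ordered by within-cuboid offset}.
    """
    shape = (len(grid), len(grid[0]), len(grid[0][0]))
    slots = {}
    for t, h, w in product(*(range(d) for d in shape)):
        idx, off = _assign((t, h, w), shape, cuboid_size, strategy, shift)
        slots.setdefault(idx, {})[off] = grid[t][h][w]
    return {idx: [vals[o] for o in sorted(vals)] for idx, vals in slots.items()}


def attend(values):
    """Step 2 (toy): scalar dot-product self-attention with q = k = v = value."""
    out = []
    for q in values:
        scores = [q * k for k in values]
        top = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        out.append(sum(wt * v for wt, v in zip(weights, values)) / sum(weights))
    return out


def merge(cuboids, shape, cuboid_size, strategy="local", shift=(0, 0, 0)):
    """Step 3: scatter every cuboid's values back to their original positions."""
    _, bh, bw = cuboid_size
    out = [[[None] * shape[2] for _ in range(shape[1])] for _ in range(shape[0])]
    for t, h, w in product(*(range(d) for d in shape)):
        idx, (ot, oh, ow) = _assign((t, h, w), shape, cuboid_size, strategy, shift)
        out[t][h][w] = cuboids[idx][(ot * bh + oh) * bw + ow]
    return out


def cuboid_attention(grid, cuboid_size, strategy="local", shift=(0, 0, 0)):
    shape = (len(grid), len(grid[0]), len(grid[0][0]))
    parts = decompose(grid, cuboid_size, strategy, shift)
    attended = {idx: attend(vals) for idx, vals in parts.items()}  # independent, parallelizable
    return merge(attended, shape, cuboid_size, strategy, shift)
```

For a 2×4×4 grid whose value at (t, h, w) is `100*t + 10*h + w`, cuboid size (1, 2, 2) gives the first cuboid `[0, 1, 10, 11]` with “local”, `[0, 2, 20, 22]` with “dilated”, and `[11, 12, 21, 22]` with “local” and shift (0, 1, 1). Because the cuboids never interact inside one layer, the “attend” step can run on all of them in parallel.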

By stacking multiple cuboid attention layers with different choices of “cuboid_size”, “strategy” and “shift”, we can efficiently explore both existing space-time attention patterns and potentially more effective new ones.
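As a sketch of how such stacks can be written down, the snippet below expresses a few well-known space-time attention patterns as lists of cuboid layer configurations. The `CuboidLayerCfg` class, the pattern names and the Swin-style sizes are hypothetical choices for illustration and do not reproduce the paper's table. The cost function counts query-key pairs, assuming every token attends only within its own cuboid and ignoring the global vectors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CuboidLayerCfg:
    cuboid_size: tuple          # (b_t, b_h, b_w)
    strategy: str = "local"     # default when "strategy" is not given
    shift: tuple = (0, 0, 0)    # default when "shift" is not given


def make_pattern(name, t, h, w):
    """A few illustrative stacks expressed as cuboid attention layers."""
    if name == "axial":         # attend along T, then H, then W
        return [CuboidLayerCfg((t, 1, 1)), CuboidLayerCfg((1, h, 1)), CuboidLayerCfg((1, 1, w))]
    if name == "divided_st":    # temporal attention, then full spatial attention
        return [CuboidLayerCfg((t, 1, 1)), CuboidLayerCfg((1, h, w))]
    if name == "swin_like":     # local windows, then windows shifted by half a window
        return [CuboidLayerCfg((2, 8, 8)), CuboidLayerCfg((2, 8, 8), shift=(1, 4, 4))]
    raise ValueError(f"unknown pattern: {name}")


def attention_pairs(pattern, t, h, w):
    """Query-key pairs per stack: each of the T*H*W tokens attends within its cuboid."""
    tokens = t * h * w
    return sum(tokens * c.cuboid_size[0] * c.cuboid_size[1] * c.cuboid_size[2] for c in pattern)


T, H, W = 4, 8, 8
print(attention_pairs(make_pattern("axial", T, H, W), T, H, W))  # 5120
print((T * H * W) ** 2)                                          # 65536 for one full-attention layer
```

The comparison shows why the decomposition is efficient: the cost per layer scales with the cuboid volume rather than with the full sequence length.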

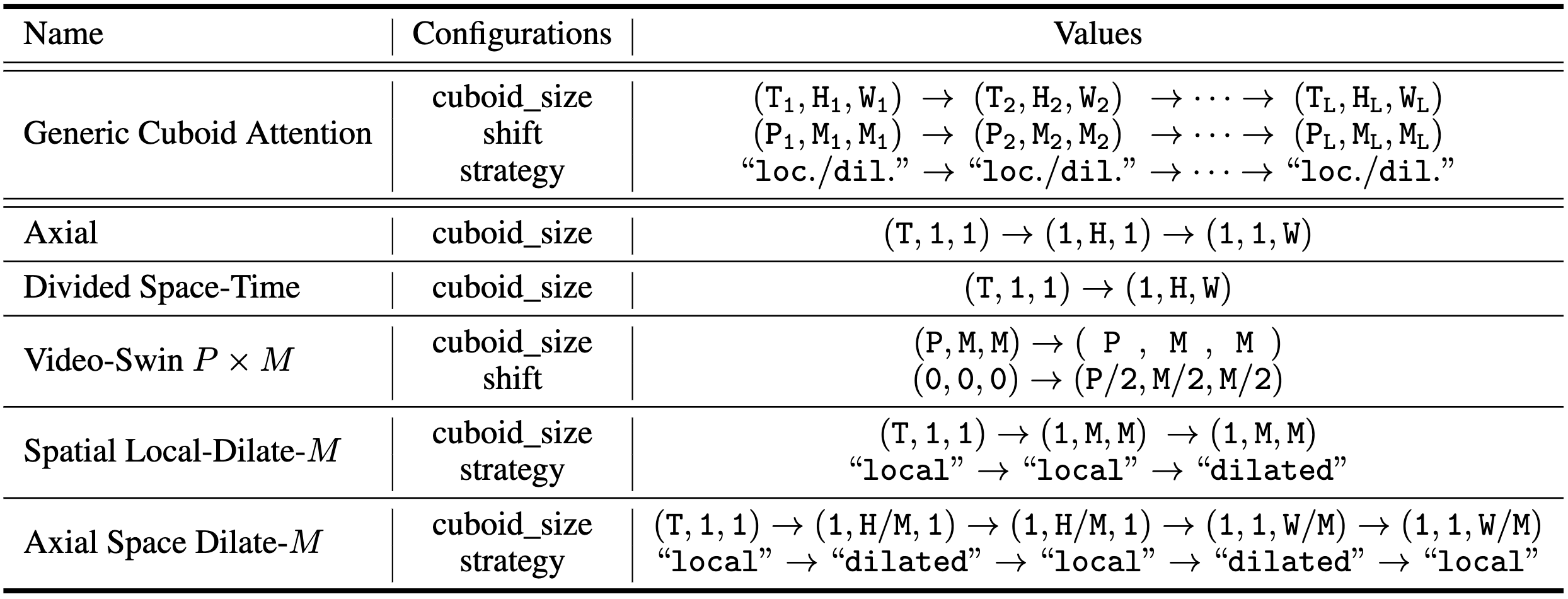

The following table lists the configurations of the cuboid attention patterns we explored. The first row shows the configuration of the generic cuboid attention. If “shift” or “strategy” is not given, we use shift=(0, 0, 0) and strategy="local" by default. When stacking multiple cuboid attention layers, each layer is paired with layer normalization and a feed-forward network, as in the Pre-LN Transformer.
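The Pre-LN arrangement can be sketched as follows: each sub-layer sees a normalized input, and its output is added back to the un-normalized residual stream. This toy version assumes tokens are lists of floats, uses layer normalization without learned gain or bias, and treats the attention and feed-forward sub-layers as arbitrary callables (a cuboid attention layer with one of the configurations above would take the `attn` slot). The function names are hypothetical.

```python
import math


def layer_norm(tokens, eps=1e-5):
    """Normalize each token's features to zero mean and unit variance (no learned gain/bias)."""
    out = []
    for v in tokens:
        mean = sum(v) / len(v)
        var = sum((a - mean) ** 2 for a in v) / len(v)
        out.append([(a - mean) / math.sqrt(var + eps) for a in v])
    return out


def add(a, b):
    """Element-wise residual addition of two token lists."""
    return [[x + y for x, y in zip(u, v)] for u, v in zip(a, b)]


def pre_ln_layer(tokens, attn, ffn):
    """Pre-LN: normalize before each sub-layer, then add its output to the residual."""
    tokens = add(tokens, attn(layer_norm(tokens)))
    return add(tokens, ffn(layer_norm(tokens)))


def stack_layers(tokens, layers):
    """Apply a list of (attention_fn, ffn_fn) pairs in order."""
    for attn, ffn in layers:
        tokens = pre_ln_layer(tokens, attn, ffn)
    return tokens
```

One reason for this design is that the residual path is never normalized, so a stack whose sub-layers output zeros reduces to the identity, which tends to make deep stacks easier to optimize.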

Experiments

MovingMNIST

Following Unsupervised Learning of Video Representations using LSTMs (ICML 2015), we use the public MovingMNIST dataset.

N-body MNIST

We extend MovingMNIST to a more challenging, chaotic N-body MNIST dataset by adding long-range, non-linear gravitational interactions among the moving digits.

Access to our N-body MNIST dataset:

- Download the N-body MNIST dataset used in our paper from AWS S3.

- Generate a custom N-body MNIST dataset with our script by following the instructions.

The following figure illustrates the chaos in N-body MNIST: a slight disturbance of the initial velocities has a much larger effect on N-body MNIST than on MovingMNIST. The top half shows two MovingMNIST sequences whose initial conditions differ only slightly in the initial velocities. The bottom half shows two N-body MNIST sequences: N-body MNIST sequence 1 has exactly the same initial condition as MovingMNIST sequence 1, and N-body MNIST sequence 2 has exactly the same initial condition as MovingMNIST sequence 2. After 20 steps of evolution, the final digit positions of the two MovingMNIST sequences differ only slightly, while the final frames of the two N-body MNIST sequences differ much more.
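The experiment above can be mimicked with point masses. The sketch below is not the authors' generator script: it treats each digit as a unit-mass point, ignores wall bounces and digit shapes, and integrates softened pairwise gravity with semi-implicit (symplectic) Euler. The constants `g` and `softening`, the start positions and the velocities are arbitrary assumptions. Setting `g=0.0` recovers MovingMNIST-style constant-velocity motion.

```python
import math


def simulate(pos, vel, steps, dt=1.0, g=0.0, softening=1.0):
    """Move unit-mass points for `steps` steps and return their final positions.

    Semi-implicit Euler: update each velocity from pairwise gravity, then the
    position from the new velocity. g=0.0 gives constant-velocity motion.
    """
    pos = [list(p) for p in pos]
    vel = [list(v) for v in vel]
    for _ in range(steps):
        acc = [[0.0, 0.0] for _ in pos]
        for i, (xi, yi) in enumerate(pos):
            for j, (xj, yj) in enumerate(pos):
                if i == j:
                    continue
                dx, dy = xj - xi, yj - yi
                r2 = dx * dx + dy * dy + softening ** 2  # softening avoids division by ~0
                f = g / (r2 * math.sqrt(r2))             # acceleration = g * r_vec / |r|^3
                acc[i][0] += f * dx
                acc[i][1] += f * dy
        for p, v, a in zip(pos, vel, acc):
            v[0] += a[0] * dt
            v[1] += a[1] * dt
            p[0] += v[0] * dt
            p[1] += v[1] * dt
    return pos


def max_gap(a, b):
    """Largest distance between corresponding points of two runs."""
    return max(math.dist(p, q) for p, q in zip(a, b))


start = [(10.0, 10.0), (30.0, 12.0), (20.0, 30.0)]
v1 = [(0.5, 0.0), (0.0, 0.5), (-0.5, 0.0)]
v2 = [(0.51, 0.0), (0.0, 0.5), (-0.5, 0.0)]  # slight disturbance of one initial velocity
for g in (0.0, 5.0):
    print(g, max_gap(simulate(start, v1, 20, g=g), simulate(start, v2, 20, g=g)))
```

With `g=0.0` the gap after 20 steps is exactly the 0.01 velocity difference times 20 steps, about 0.2 up to floating-point rounding. With attraction turned on, the disturbance feeds back through the pairwise forces, so the gap can grow much faster; how much depends on the chosen constants.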

With the help of global vectors, Earthformer predicts the positions of the digits more accurately. In contrast, none of the baseline algorithms that perform well on MovingMNIST predicts the correct, precise position of the digit “0” in the last frame.

SEVIR

The Storm EVent ImageRy (SEVIR) benchmark supports scientific research on multiple meteorological applications, including precipitation nowcasting, synthetic radar generation and front detection. We adopt SEVIR for benchmarking precipitation nowcasting, i.e., predicting the future VIL up to 60 minutes ahead (12 frames) given 65 minutes (13 frames) of context VIL.
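The 13-in / 12-out setup can be illustrated by cutting an event into (context, target) windows of frame indices. This is a minimal sketch under assumptions: the 5-minute frame spacing is implied by 13 frames spanning 65 minutes, the 49-frame event length is our assumption about SEVIR, and the stride of 12 is a hypothetical choice that may differ from the official data loader.

```python
FRAME_MINUTES = 5  # implied by 13 frames <-> 65 minutes


def make_windows(num_frames, in_len=13, out_len=12, stride=12):
    """Split one event of `num_frames` frames into (context, target) index windows."""
    total = in_len + out_len
    windows = []
    for start in range(0, num_frames - total + 1, stride):
        context = list(range(start, start + in_len))
        target = list(range(start + in_len, start + total))
        windows.append((context, target))
    return windows


wins = make_windows(49)  # assuming a 49-frame event
print(len(wins))                                      # 3
print(wins[0][0][-1], wins[0][1][0], wins[0][1][-1])  # 12 13 24
print(13 * FRAME_MINUTES, 12 * FRAME_MINUTES)         # 65 60
```

Each target window starts on the frame right after its context ends, so the model always forecasts the immediate future of what it has seen.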

Check out the public SEVIR leaderboard on Papers With Code.

ICAR-ENSO

The dataset is available on TIANCHI.

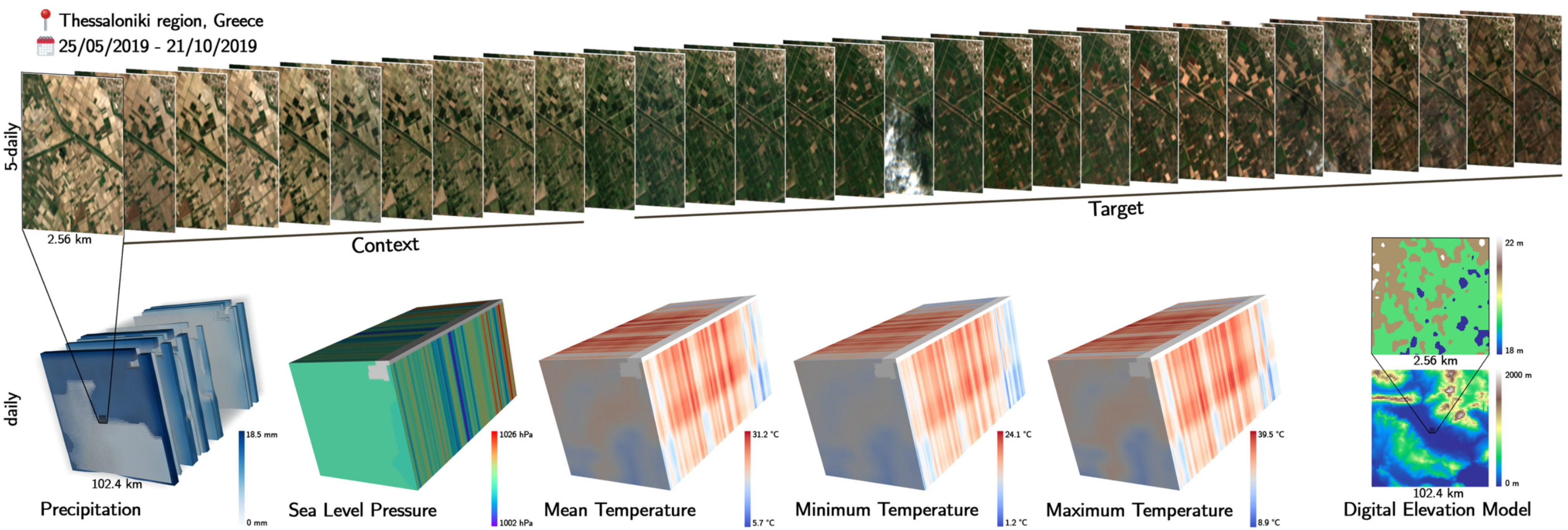

EarthNet2021

EarthNet2021 is a dataset and challenge for the task of Earth surface forecasting.

Check out the official EarthNet2021 leaderboard and the public leaderboard on Papers With Code.

BibTeX

@inproceedings{gao2022earthformer,
  title={Earthformer: Exploring Space-Time Transformers for Earth System Forecasting},
  author={Gao, Zhihan and Shi, Xingjian and Wang, Hao and Zhu, Yi and Wang, Yuyang and Li, Mu and Yeung, Dit-Yan},
  booktitle={NeurIPS},
  year={2022}
}